The techniques that I use in this tutorial are:

After that, you can upload it to your shared hosting.

DRUPAL AWS S3 DOWNLOAD

Please download it to your local host and configure it there.

DRUPAL AWS S3 INSTALL

You will need to run several client programs, such as drush and awscli. If your site is on shared hosting, you will not be able to install and run them there.
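A quick way to find out whether a given host has the client programs is to look for them on the PATH. The sketch below is only an illustration: `missing_tools` is a hypothetical helper name, and `aws` is assumed to be the executable that awscli installs.

```shell
#!/bin/sh
# Print the name of every tool passed as an argument that is not on PATH.
# missing_tools is a hypothetical helper, not part of drush or awscli.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

missing=$(missing_tools drush aws)
if [ -n "$missing" ]; then
    # Unquoted on purpose so the names are joined onto one line.
    echo "Not installed here:" $missing
else
    echo "drush and aws are both available"
fi
```

If either name is reported as missing, that host is not the place to run the steps that depend on it.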

DRUPAL AWS S3 HOW TO

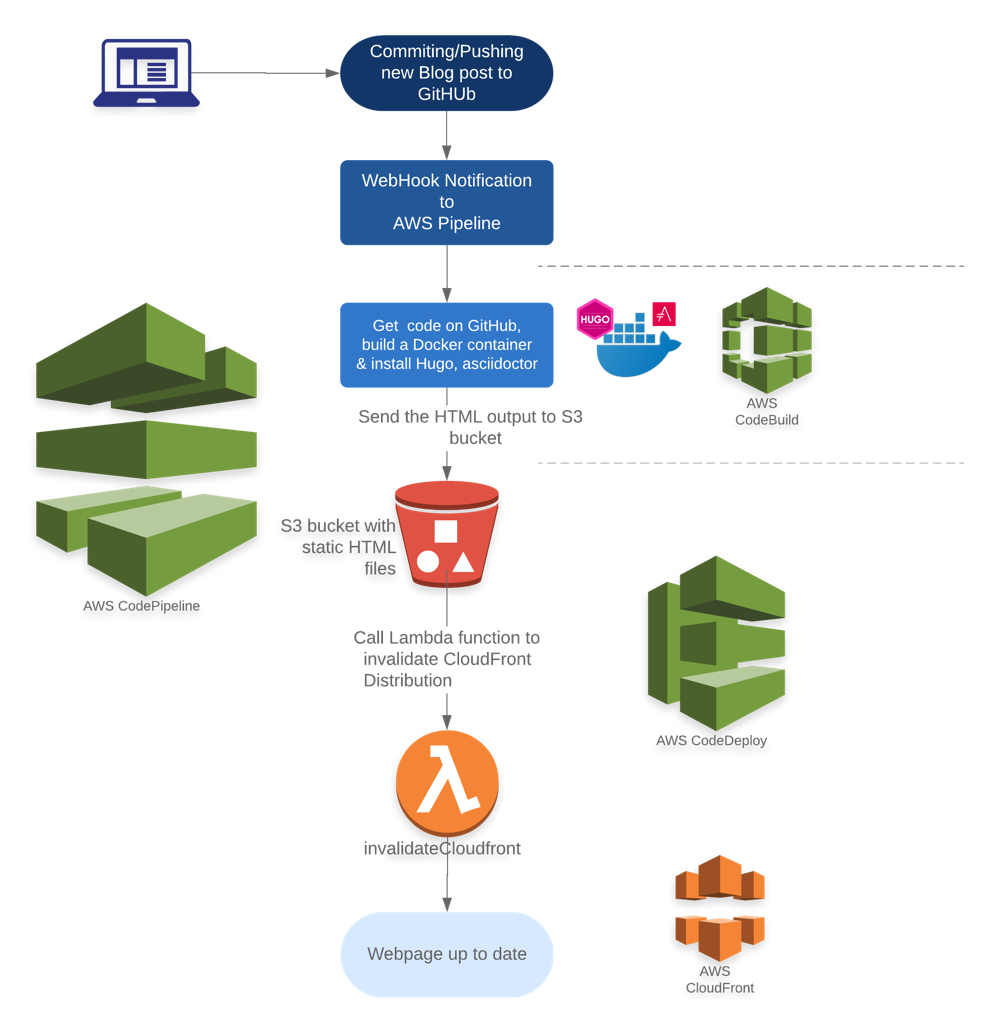

In this tutorial, we will show you how to switch the Drupal 7 and 8 file system to Amazon S3 and sync all existing files to S3 storage. Because customers are spread all over the world, storing multimedia content on S3 lets them access it much faster.
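Syncing the existing files could be done with the `aws s3 sync` command from awscli. This is a minimal sketch under stated assumptions: the local path `sites/default/files`, the bucket name `example-bucket`, and the helper `sync_command` are all placeholders, not values from this tutorial. The helper only prints the command so that it can be reviewed before anything is uploaded.

```shell
#!/bin/sh
# Hypothetical defaults: a stock Drupal files directory and a made-up bucket.
FILES_DIR="${FILES_DIR:-sites/default/files}"
S3_TARGET="${S3_TARGET:-s3://example-bucket/drupal-files}"

# Print the aws CLI command that would copy a local directory to S3.
# Pass "preview" as the third argument to add --dryrun, which makes
# aws list the planned transfers without uploading anything.
sync_command() {
    cmd="aws s3 sync $1 $2"
    if [ "${3:-}" = "preview" ]; then
        cmd="$cmd --dryrun"
    fi
    printf '%s\n' "$cmd"
}

sync_command "$FILES_DIR" "$S3_TARGET" preview
sync_command "$FILES_DIR" "$S3_TARGET"
```

Running the preview form first is a cheap check that the source path and bucket are the ones you intended. Paths containing spaces would need quoting before the printed command is run.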

Why didn't I use drush or backup_migrate? I wanted a solution that ran outside of Drupal and would work for any type of site. Let me know if you have any improvements.

DRUPAL AWS S3 CODE

One thing to note is that I first backup the table structures and then the contents so that I don't get the contents of cache tables (Couldn't find the article where I got that code so if you add a comment below I'll add in a link to it). That's it! The bash script should be fairly straightforward and easy to modify if you want to. S3cmd put $TMP/$ $S3_BUCKET/sites/$BASE-$ S3cmd put $TMP/$DB-$DATE.gz $S3_BUCKET/databases/$DB-$DATE.gz įor DIR in $(find "$SITES_DIR" -mindepth 1 -maxdepth 1 -type d) Mysqldump -complete-insert -disable-keys -single-transaction -no-create-info -user=$DB_USER -password=$DB_PASSWD $DB $TABLES2 > $TMP/$DB-$DATE TABLES2=`echo "$TABLES" | grep -Ev "^(accesslog|cache_.*|flood|search_.*|semaphore|sessions|watchdog)$"` #Then dump the data, except for cache and temporary tables. Mysqldump -complete-insert -disable-keys -single-transaction -no-data -user=$DB_USER -password=$DB_PASSWD -opt $DB $TABLES > $TMP/$DB-$DATE

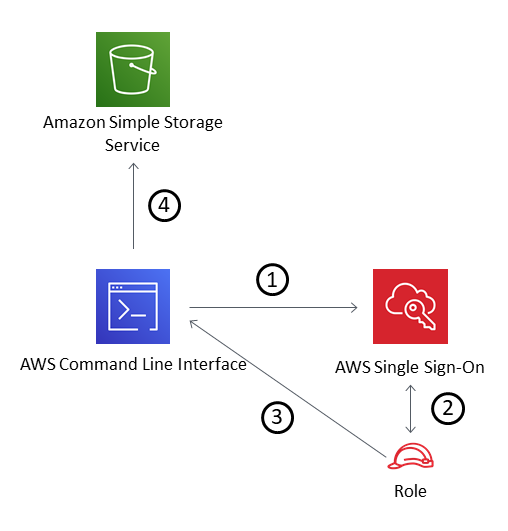

TABLES=`mysql -skip-column-names -e 'show tables' -user=$` Create a file that will be run daily with cron that contains:įor DB in $(mysql -user=$DB_USER -password=$DB_PASSWD -e 'show databases' -s -skip-column-names|grep -Ev "^(information_schema|performance_schema|mysql)$") Encrypting sensitive stuff, however, is never a bad idea.Īt this point, your command line should be set up to send files to S3. By default, your data should be safe unless you make it public. Use this if you are very worried about security. This is separate from the access keys and will be used to encrypt the data before it is sent to S3. You can also optionally enter a GPG encryption key. This will store the keys in a config file so that your server can access your S3 account. Then run s3cmd -configure from the command line of your server and enter your Access and Secret keys. You can get more information along with other install methods at If you are on Ubuntu, it is in the apt repo so just type apt-get install s3cmd. Next, install s3cmd which is a command line tool for sending files to S3.

Glacier is far cheaper than S3 but takes 4-5 hours to recover. For now mine are to send to glacier after 7 days and then delete after 3 months. For the bucket, go to the properties and set the lifecycle rules however you want. Follow their instructions for how to do this if you don't know how. This can be done from your account Security Credentials page. All I needed was a script to send the backup files to Amazon S3 every night.įirst, sign up with Amazon S3 and generate an Access and Secret keys. This removed the need for the backup script to handle the deletion logic. It also had the ability to set up lifecycle rules so that as files got older, I could move them to glacier or delete them. I decided on S3 because it was easy, fast and cheap. Only backup the contents of tables that are not cache tables. Backup all sites and databases on the server individually.ģ. In that regard, I recently wrote a backup script to back up a server with multiple sites to Amazon S3.ġ. While there are many out there, often times they boil down to a script. As part of setting up servers, we need to set up a backup system.
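The rule of backing up only the contents of non-cache tables comes down to a `grep -Ev` filter over the table list. The sketch below runs that filter on its own so it can be tried without a database. The pattern is the one from the backup script, while the helper name `data_tables` and the sample table names are made up for illustration.

```shell
#!/bin/sh
# Read table names on stdin, one per line, and print only those whose
# row data should be dumped. data_tables is a hypothetical helper name;
# the pattern is the one used in the backup script.
data_tables() {
    grep -Ev '^(accesslog|cache_.*|flood|search_.*|semaphore|sessions|watchdog)$'
}

# Made-up table list standing in for the output of "show tables".
printf '%s\n' node users cache_page cache_menu sessions watchdog search_index | data_tables
```

With this sample list, only `node` and `users` pass the filter. Note that the pattern is anchored and requires the underscore, so a table named exactly `cache` would not match `cache_.*` and its contents would still be dumped. Extend the alternation if you want to skip it as well.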
